Summary of What We Owe The Future

Published 20 January 2023


13,267 words • 67 min read

This is a summary for a popular audience of the book What We Owe The Future, by William MacAskill. The ‘overall summary’ section is a 5–10 minute read, and the remaining sections summarise individual chapters in more detail. Although the text hews lazily close to the source in many cases, I take responsibility for any inaccuracies or misinterpretations — this isn’t an official summary or anything like that.

Overall summary

We live at a pivotal time. First, we might be living only at the very beginning of humanity’s entire history. Second: the entire future, and the fortunes of all those people who could live there, could hinge on decisions we make today.

What We Owe The Future is about an idea called longtermism: the view that we should be doing much more to protect the interests of future generations. In other words, improving the prospects of all future people — over even millions of years — is a key moral priority of our time.

From this perspective, we shouldn’t only focus on reversing climate change or ending pandemics. We should try to help ensure that civilization would rebound if it collapsed; to prevent the end of moral progress; and to prepare for a world where the smartest people may be digital, not human.

Imagine every human standing in a long succession: from the first ever Homo sapiens emerging from the Great Rift Valley, to the last person in this continuous history to ever live. Now imagine compressing all these human lives into a single one. We can ask: where in that life do we stand? We can’t know for sure. But here’s a clue: suppose humanity lasted only a tenth as long as the typical mammalian species, and world population fell to a tenth of its current size. Even then, more than 99% of this life would lie in the future. Scaled down to a single typical life, humanity today would be just 6 months old.

But humanity is no typical mammal. We might well survive even longer than that; for hundreds of millions of years until the Earth is no longer habitable, or far beyond. If that’s the case, then humanity has just been born; just seeing light for the first time.

Knowing how big the future could be, are there ways we can help make sure it goes well? And if so, should we care? These are the central questions of longtermism. This book represents over a decade’s worth of full-time work aimed at answering them.

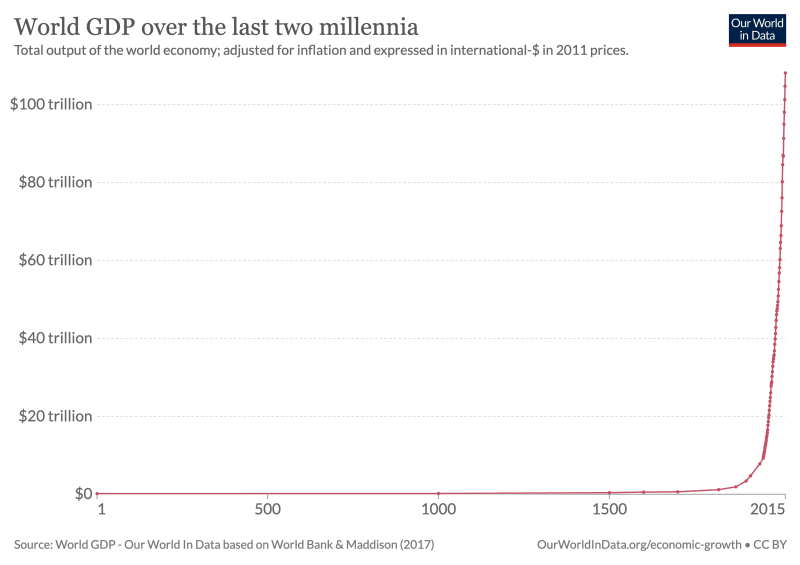

The book comes up with some striking answers. First, it argues that we are living at a moment of remarkable importance. One major line of argument notes how present-day rates of change and growth are unprecedentedly high. From 10,000 BC onwards, it took millennia for the world economy to double in size; but (sidenote: See Our World in Data’s graph showing world GDP over the last two millennia. ). We know this rate of growth cannot continue indefinitely. That means that either humanity must begin to stagnate; or else soon begin its biggest growth spurt ever. Plus, when we consider some of the causes and consequences of this growth — like fossil-fuel power, nuclear weapons, man-made pathogens, and advanced artificial intelligence — we see that these very technologies have the power to alter the course of the future, depending on how they’re built and managed.


A second reason for thinking we live at an unusually influential time comes from history: from case studies of how unassuming figures, finding themselves in moments of upheaval and plasticity, shaped the values that guide the future. Nothing illustrates this better than the story of abolitionism. It’s natural to think that slavery was bound to end in the 19th century because of economic forces. But the records show that this isn’t so clear. In fact, were it not for the dedication and foresight of a small group of moral radicals, slavery might have remained ubiquitous to the present day.

Because values which now seem utterly unconscionable seemed so natural to our ancestors, we should expect that most of us are only dimly aware of values which our descendants will be shocked to learn we hold. If people today can shape the values that last into our future, then we should draw inspiration from those early abolitionists and push for progress on today’s ethical frontiers.

History also teaches us how, once values are chosen, they can become locked-in for vast stretches of time. For instance, we can learn from the rise of Confucianism in the Chinese Han dynasty. At first, Confucianism was a relatively obscure school of thought. But once it became so unexpectedly influential, it remained so for over a thousand years.

But if value lock-in occurs this century, then it could last longer than ever. In fact, MacAskill argues that some set of values might soon come to determine the course of history for many thousands of years. The reason is artificial general intelligence: AI that is capable of learning as wide an array of tasks as human beings can, and which can perform them to at least the same level as human beings. We shouldn’t write this off as a fantasy. In fact, the evidence we have makes the prospect of AGI this century impossible to ignore. And, the argument goes, advanced AI could enable particular values to become locked-in. If that set of values doesn’t benefit humanity, then we will have lost the vast potential ahead of us.

Another way to lose out on our potential is for humanity to go prematurely extinct. In 1994, comet Shoemaker-Levy 9 slammed into the side of Jupiter with the force of 6 million megatons of TNT, equivalent to 600 times the world’s nuclear arsenal. Threats from asteroids to life on Earth no longer seemed so hypothetical. So, in 1998, Congress gave NASA the funding to track down more than 90% of all asteroids and comets larger than 1 kilometre within a decade. The effort was called Spaceguard, and it was an overwhelming success. Shoemaker-Levy 9 taught us that threats to humanity’s survival are real, but Spaceguard taught us that it’s possible to protect against the causes of our own extinction.

Unfortunately, asteroids do not appear to pose the largest threat of human extinction. Much more concerning is the possibility of artificial pandemics: diseases that we ourselves will design, using the tools of biotechnology. Biotechnology has recently seen breathtaking progress, and the tools of synthetic biology will soon become accessible to anyone in the world. We are fortunate that the fissile material required for nuclear weapons is difficult to manufacture, and relatively easy to track. Not so for artificial pathogens. While the tools of artificial pandemics remain relatively hard to access, we’ve already seen an embarrassing number of lab leaks and accidents. If we are going to avoid disaster in the future, we need to be far more careful.

But human extinction isn’t the only way we throw away our entire potential — civilization might instead simply collapse irrecoverably. To know how likely permanent collapse is, we need to know about the fragility of civilization, and its chances of recovery after a collapse. This book finds that humanity is capable of remarkable resilience. Consider the atomic bombing of the Japanese city of Hiroshima in 1945. The immediate destruction was enormous: 90% of the city’s buildings were at least partially incinerated or reduced to rubble. But in spite of the devastation, power was restored to Hiroshima’s rail station and port within a day, and to all homes within two months. The Ujina railway line was running the day after the attack, streetcars within three days, and water pumps within four. Today Hiroshima is a thriving city once again.

However, civilizational collapse today would be different to those of the past in a crucial respect: we have used up almost all of the most readily accessible fossil fuels at our disposal. Historically, the use of fossil fuels is almost an iron law of industrialisation. In this way, the depletion of fossil fuels might hobble our attempts to recover from collapse. That gives us an underappreciated reason for keeping coal in the ground: to give our descendants the best shot at recovering from collapse.

However, more likely than collapse is the prospect of stagnation. History shows a long list of great flowerings of progress: the explosion of knowledge in the Islamic Golden Age centred in Baghdad, the engineering breakthroughs of the Chinese Song dynasty, or the birth of Western philosophy in ancient Greece. But all these periods were followed by sustained slowdown; even decline. As a global civilization, are we heading toward a similar fate? It’s hard to rule that possibility out. For instance, the economic data show that ideas are becoming harder to find, and demographic evidence suggests a sharp decline in fertility rates in many parts of the world.

Why would stagnation matter? Because of technologies like artificial pathogens and nuclear weapons, the next century could be the most dangerous period humanity faces for the rest of its future. A period of global stagnation would mean getting stuck in this ‘time of perils’. The idea of sustainability is often associated with trying to slow down economic growth. But if a given level of technological advancement is unsustainable, then that is not an option: in this case, it may be slowdowns in growth that are unsustainable. Our predicament could be like that of a climber, stranded on the cliff-face with the weather turning, but one big push from the summit. Just staying still is a bad idea: we might run out of energy and fall. Instead, the only option is to press on to the summit.

But what waits at that summit? And what’s so important about reaching it? Many people suspect that human extinction wouldn’t, in itself, be a bad thing. But some philosophical arguments suggest that we should care, morally, about letting many more people flourish far into the future. These arguments suggest that failing to achieve a bright future would not be a matter of indifference; but rather a great tragedy.

And although it sounds like science fiction, that future could be astronomical in scale. Though Earth-based civilization could last for hundreds of millions of years, the stars will still be shining in trillions of years’ time, and a civilization that was spread out across many solar systems could last at least this long. But our galaxy is one of just twenty billion galaxies we could one day reach. Just as we owe our lives to the early humans who ventured beyond their homes, it could be of enormous importance to keep this future open — and one day achieve it.

The long-run future could be huge in scope, but could it actually be good? The present state of the world isn’t encouraging: most people still live on less than $7 per day, every year millions die from easily preventable diseases, and millions more are oppressed and abused. Plus, nearly 80 billion vertebrate land animals are killed for food every year, living lives of fear and suffering. We should clearly not be content with the world as it is. But MacAskill does not argue that we fight to spread this world, with all its ills, far into the future. Instead, the hope is that we can — and will — do far better. One reason to expect a better future is the simple observation that almost everyone wants to live in a better world — and as our technological capacity continues to progress, that world comes ever closer within our reach. New technologies bring new powers to right some of the past’s wrongs: to continue raising living standards for everyone, to replace the horrors of industrial animal farming with clean meat, and far beyond.

There’s still so much we don’t know about improving the long-term future. It is as if we’re setting off on a long expedition, without a map, and trying to peer through a thick fog. But there are rules of thumb we can follow: take the good actions we’re confident are good; keep our long-term options open; and try to learn more about considerations which are crucial for our decisions. Moreover, if you want to make a difference as an individual, then the most ethically important decision you will ever make is your choice of career. If you want to help positively influence the long-term future, look for important, solvable, and neglected problems to work on.

But can you really contribute to making a difference? In short: yes. Abolitionism, feminism, and environmentalism were all “merely” the aggregate of individual actions. And because still very few people are working on projects to help improve the long-run future, you shouldn’t just assume that other people already have it covered.

If this book is right, then we face a big responsibility:

Relative to everyone who could come after us, we are a tiny minority. Yet we hold the entire future in our hands.

That was a short summary of the entire book. You could stop reading here, or you could read on for summaries of each of the book’s chapters.

Chapter 1: The Case for Longtermism

The case for longtermism begins by looking back, over the entire sweep of human history. Suppose you lived, in order of birth, through the life of every past and present human being. You would be born in Africa, some 3000 centuries ago. After living through your first life, you are born again as the second-ever person, then the third, and so on. One hundred billion lives later, you are born into the life of the youngest person alive today. Looking back, how would you describe your life? What characterises humanity’s entire lifespan to date?

You would spend a fifth of your life farming, around 5% enslaved to another person, and more than 1% suffering from malaria or smallpox. You’d spend 60 billion years in religious rituals. Because of dramatic population growth, (sidenote: See Our World in Data’s interactive graph showing world population, 10,000 BCE to 2021). As you approach the end, the world begins to change faster than ever before. You invent steam engines, computers, and nuclear weapons. Your quality of life would also increase, and you would discover luxuries that kings and queens from previous lives couldn’t have imagined.

As mentioned, if humanity survived for roughly as long as a typical mammalian species, then we today are among the very first people to live.

But humanity might survive longer than that. Without significant intervention, the Earth will remain habitable for hundreds of millions of years. The last stars will form and eventually burn out over tens of trillions of years [1]. Our future could be vaster than we ever care to imagine.

But longtermism really gets off the ground when we combine the size of the future with the case that future people matter morally — more so than society seems to imply through its actions. After all, the fact that we are separated from future people by decades or millennia means their pains and joys will be no less real than our own when their turn comes. By analogy, a life is not made less morally significant — less worth saving or caring for — simply by being separated by a long spatial distance. MacAskill argues that we need to construct a moral worldview that takes seriously what’s at stake. We need a shared recognition of the moral significance of the future — one that can cut across many perspectives and affiliations. That’s the case for longtermism.

Chapter 2: You Can Shape the Course of History

Human beings have been making choices with long-term consequences for tens of thousands of years. Consider: why are there no safaris in South America? Surprisingly, the answer has little to do with the difference in physical environment between South America and Africa.

Fifty thousand years ago, our planet was home to a far greater variety of megafauna than today. (sidenote: Here’s a picture of a Glyptodont. ) — roaming South America for some 20 million years. Or the South American Megatherium, a giant ground sloth rivaling the Asian elephant in size. But around 10,000 years ago, both these species went extinct.


Changes to climate likely played some role, but the evidence suggests these mass extinctions were ultimately decided not by nature, but by human choices. Because in most cases the DNA remains we have are too degraded for “de-extinction”, hunting and killing the last of these creatures was a decision that will resonate for as long as there is life on Earth.

Controlling the natural world is not the only way our predecessors made decisions whose effects last to the present day. Consider the permanence that world-shaping ideas and institutions can take on after they are formed. The contents of the New Testament were in flux throughout the first few centuries AD. But by the 5th century, a consensus had emerged, and from that point on the New Testament has remained almost entirely fixed. Much later, the US Constitution was drafted in around four months. Yet it survives in essentially the same form today, and received only one small amendment in the past 50 years.

Decisions made in times of crisis are especially apt to survive long after the crisis subsides. In the aftermath of World War II, Korea was divided into two along the 38th parallel. Two young American officers proposed the shape of the border; working on short notice and with no expert consultation. Decades later, the line they drew feels irreversible. Yet it decides the fate of millions of people: between freedom and brutal totalitarianism.

What these examples have in common is that they began with a period of plasticity, in which the course of history is unusually malleable; followed by a ‘cooling’ period, in which a few open decisions might remain fixed for centuries.

If we could travel back to these moments of plasticity, we would want to carry news of their moral weight. “Think carefully”, we could say: “millions of people are counting on you.” And if future people could visit us with similar news, we should listen closely.

In place of warnings from future people, we can do our best to build a framework for identifying which choices today could turn out to be the most consequential, on this long-term perspective. MacAskill argues that such choices should be significant, persistent, and contingent. A choice is significant if it brings about a large improvement for the time that it lasts. It is persistent if the change it brings about lasts for a very long time. And it is contingent if now is a unique opportunity to bring the change about, such that it might otherwise never get made.

Events of high significance, persistence, and contingency are extremely rare. For instance, the extinction of South American megafauna was an event of extraordinary persistence, but it likely wasn’t very contingent: if the humans who hunted the last glyptodonts had stayed home that day, another hunting trip would probably have caused the same outcome.

But when truly important events do come around, it is typically during periods of plasticity; like the drafting of the US constitution. In the remainder of this book, MacAskill argues that this dynamic of “early plasticity, later rigidity” could be true of our moment in history as a whole. The values that guide our civilization are still malleable; but they could soon ossify, constraining the course of the entire future.

Chapter 3: The Values that Guide the Future

Yet, since it has been possible to take and hold slaves, slavery has been close to ubiquitous: from the earliest agricultural civilizations of ancient Mesopotamia, Egypt, China and India; and reaching its apogee with the transatlantic slave trade. Over this period, slave traders took more than twelve million slaves from Africa to the New World. Slaves were packed into transport ships in quarters so cramped and so poorly ventilated that they sometimes suffocated to death. 1.5 million died in transit.

It’s hard to imagine how people could have believed that slavery was permissible; even part of the natural order. Yet even the most lauded moral thinkers of their times — Aristotle, Aquinas, Kant — didn’t think to object. In some cases, they wrote to justify the practice.

Nevertheless, the transatlantic slave trade was abolished, and slavery outlawed worldwide.

The first remarkable thing about abolition is that it happened at all. Surprisingly, the historical evidence suggests its success was far from guaranteed. The second remarkable feature of abolition is that such a small number of committed activists spearheaded the movement, and made good on their vision in such a short time.

Historical accounts of abolitionist activism often focus on figures like Frederick Douglass, Harriet Beecher Stowe, and William Wilberforce. But they were carrying forward a movement that had been ignited decades earlier — in the late 1600s and early 1700s — by a small number of moral radicals; Mennonites and Quakers in and around Philadelphia. (sidenote: Here’s a portrait of Lay, commissioned by Deborah Read, whose husband was another Benjamin: Franklin. )


Lay was born in England in 1682, and sailed to Philadelphia in the summer of 1732. A dwarf, standing at just over four feet in height, he likened himself to “little David” who killed Goliath. The story of Lay’s life is one of endless acts of courage and conviction. On one occasion, he brought a hollow bible filled with red pokeberry juice to a yearly Quaker meeting. Rising to his feet, he exclaimed:

Oh all you Negro masters who are contentedly holding your fellow creatures in a state of slavery, […] It would be as justifiable in the sight of the Almighty, who beholds and respects all nations and colours of men with an equal regard, if you should thrust a sword through their hearts as I do this book!

Before splattering the gathering with fake blood.

Remarkably, the principled abolitionism of Lay and other Quakers appears to have close to no precedent. It is difficult to find many examples of true abolitionist campaigning, at any point in history, anywhere in the world.

This suggests that abolition was a highly contingent event: if the early Quakers had not held to their principles, and had history not taken a few fortunate turns, institutionalised slavery might still be ubiquitous. It is natural to think that economic forces made it straightforwardly expedient to end slavery, but this isn’t right: at the time of abolition, slavery was enormously profitable for the British. There was a major lobby from British slave-owners well into the 1830s, whom the government paid off in order to achieve emancipation. This cost 40% of the British Treasury’s annual expenditure. To finance the payments, the British government took out a £15 million loan, which was not fully paid back until 2015.

Yet, the abolition of slavery was one of the most important moral events ever. It is an example of a values change: a change in the moral attitudes and norms of a society. When values changes are (sidenote: As discussed above.), then they appear highly important on a longtermist perspective.

Why? Looking to the past, we see that such changes have had an enormous impact on the lives of billions of people. Looking to the future, if we can improve the values that guide the behaviour of generations to come, we can be pretty confident that they will take better actions, even if they’re living in a world very different from our own. Because positive values changes adapt to new circumstances, we can be unusually confident about their longterm effects compared to other kinds of intervention.

But if some change we make to society’s values would simply have happened anyway, then the long-run impact of that change is not so great. That’s why contingency matters, and why the book highlights the abolition movement.

In some ways, the evolution of values reflects the evolution of living things. The biologist Stephen Jay Gould argued[2] that if we were to rewind the “tape of life”, even very slight changes in the distant past could lead to (sidenote: Here’s a more recent (2012) review: Replaying the tape of life: quantification of the predictability of evolution). This doesn’t mean biological evolution is chaotic. Far from it: species settle into niches which can then become stable for millions of years. But which niches get chosen in the first place? That looks highly contingent.

Just as some species are fitter than others, so some value systems may predominate; not because they are better for the world, but because they ‘outcompete’ others and entrench themselves.

In this way, just like long-lasting species, value systems can persist. But just like the “tape of life”, which values get chosen is often highly sensitive to a few key early decisions.

Consider our attitudes to animals. What if the industrial revolution had occurred in vegetarian-friendly India? Maybe then the enormous rise of factory farming over the last century would never have occurred. Maybe the people in that world would consider the suffering and death of tens of billions of animals every year in our world as an utter abomination.

Once we take the contingency of moral norms seriously, we can start to consider a dizzying variety of ways in which the moral beliefs of the world could have been very different.

Because values which seem utterly unconscionable to us seemed so natural to our ancestors, we might expect our descendants will be shocked to learn about some of the values we hold today. So perhaps we should follow the example of Benjamin Lay: we should reason carefully about what moral progress we can make, and hold true to the answer — no matter how weird it may sound.

Chapter 4: Lock-in

In China in the 6th century BC, the Zhou dynasty collapsed after over 500 years of rule. This collapse also led to a vibrant era of philosophical and cultural experimentation — a Golden Age of Chinese philosophy that later scholars referred to as the period of “the Hundred Schools of Thought.” Of the “hundred” schools, there were four leading philosophies. Each competed for adherents and influence on government doctrines.

But in the early years of the Han dynasty, through a combination of luck and the skillful politicking of Confucian scholars, (sidenote:  A statue depicting Confucius.). Confucians encouraged obedience to authority, respect for one’s parents, and partiality to one’s family, rulers, and state.


Emperor Xuan, reigning from 74 to 48 BC, made Han dynasty China the first Confucian empire. For over a millennium, every educated person in China was required to master the Confucian canon. Still today, the Confucian emphasis on secularism and social harmony can be seen in the responses people from these countries give when asked questions like what they think is important in life, how they expect their children to behave, and what their hopes for the future are.

The rise of Confucianism illustrates the phenomenon of lock-in: Han dynasty China succeeded at locking in Confucianism for over a thousand years. This book argues that the technologies of the present century could enable lock-in of even more permanent kinds — maybe even lasting as long as civilization itself.

In China, the Hundred Schools of Thought was a period of plasticity. Like still-molten glass, during this time the philosophical culture of China could be blown into one of many shapes. Eventually, the glass cooled and set.

We are now living through the global equivalent of the Hundred Schools of Thought. Different moral worldviews are competing for power, and no single worldview has yet won out. As such, it’s still possible to alter and influence which ideas have prominence. But this long period of diversity and change might soon end. At some point this century, some set of values could come to dictate the entire course of history.

This is because of a technology we haven’t developed yet, but might develop soon: artificial general intelligence (or AGI). AGI is a system or collection of systems which can learn as wide an array of cognitive tasks as human beings can, and perform them to at least the same level as human beings. Achieving true AGI means building agents which are capable of making and executing complex plans (since this is a cognitive task human beings can perform). AI can already outperform humans at games like chess and Go, as well as image recognition tasks. But reaching AGI would mean reckoning with machines that could hold compelling conversations, invent new technologies, and accurately understand our motivations. We might trust such systems to make strategic decisions and plans on behalf of companies.

Predicting long-run technological progress is an uncertain art, but the evidence we have suggests we might reasonably expect AGI this century. One insight comes from Ajeya Cotra, a researcher at Open Philanthropy. She compared trends in (artificial) computing power to the computing power of the brains of biological creatures. On this measure, today’s AI systems are roughly as powerful as insect brains. Crucially, however, the effective computing power of cutting-edge AI systems is increasing exponentially over time. Given extrapolations of these trends, and given our best guesses from neuroscience, Cotra found that we are likely to train AI systems that use as much computation as a human brain within the next decade, and that we’ll probably have enough computing power to build AGI by 2050.

The development of AGI would likely be a moment of monumental importance, for two reasons.

First, it could massively speed up the rate of technological progress. As AI improves, more and more kinds of human labor are replaceable by intelligent machines. When AI is fully general, it might replace a vast range of jobs. At that point, adding to the stock of labor would no longer be constrained by the slow pace of child-rearing. For that reason, the world economy could begin to grow much faster; perhaps (sidenote: At more familiar growth rates of 3% per year, doublings take just over 23 years.)

But that’s not the only way that AGI could transform growth. As well as simply increasing the size of the effective labor force, the development of AGI could enable us to automate the process of innovation and technological discovery itself. As the British mathematicians and codebreakers I. J. Good[3] and Alan Turing first suggested, this process could lead to runaway growth because of its recursive nature: automated innovation can mean building more and better machines for innovation, which themselves help build more and better machines…

Growth rates have increased 30-fold since the agricultural era. So it’s not unthinkable that they increase 10-fold further again. If they did, however, the world economy would double in size every two and a half years. And this rate of growth simply cannot be sustained for very long before there just isn’t room to grow further.
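The doubling times quoted here can be checked with a short calculation. The sketch below is illustrative only: it assumes constant compound annual growth, and it reads “10-fold” as moving from the familiar 3% per year to 30% per year. The function name `doubling_time` is made up for this example.

```python
import math


def doubling_time(annual_growth_rate: float) -> float:
    """Years for a quantity to double under constant compound annual growth."""
    if annual_growth_rate <= 0:
        raise ValueError("growth rate must be positive")
    # Solve (1 + r) ** t == 2 for t.
    return math.log(2) / math.log(1 + annual_growth_rate)


familiar_rate = 0.03               # 3% per year, the familiar rate cited in the text
tenfold_rate = familiar_rate * 10  # assumption: "10-fold" means 30% per year

for rate in (familiar_rate, tenfold_rate):
    print(f"{rate:.0%} growth: doubles every {doubling_time(rate):.1f} years")
```

Under these assumptions the two results come out at roughly 23.4 and 2.6 years, in line with “just over 23 years” and “every two and a half years”.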

A speed-up in the rate of technological progress is the first reason why AGI would be a monumental event. The second reason is based on an AGI system’s potential longevity.

For instance: if one major power unlocks AGI-fuelled growth first, they might rapidly become more powerful than all other powers (such as countries) combined. This wouldn’t merely be an economic advantage. With much more rapid rates of technological progress, AGI could fuel a new wave of weapons of mass destruction. Over the course of mere years, an authoritarian country could wield a terrible influence over the rest of the world.

As such, AI might unlock much faster rates of economic growth and technological progress, which could allow a single country to become dangerously powerful.

But that’s not the only, or even the main, reason to be concerned that AGI could lock in one set of values for so long. These are scenarios in which humans control the AGI, and use AGI to lock in their values. But human control is not guaranteed. After all, if we can build AGI, it will likely not be long before we could build AI systems that far surpass human abilities across all domains, just as current AI systems are already far beyond human abilities at chess and Go.

By analogy, suppose a large group of chimpanzees appointed a much smaller group of humans to help guide their chimpanzee civilization. They would look for humans that centrally cared about chimp welfare, and they might think they’d found some. But it wouldn’t be surprising if the humans quickly outsmarted the chimpanzees and implemented their own agenda: not one that is set against the chimpanzees, but rather one which is indifferent to them. The concern is that an AGI, once we bring it about, will likewise implement its own objective with extraordinary efficiency, no matter how good that objective is from humanity’s perspective. If AI agents did gain power over humanity, then it’s unclear how humans would recover control. Those agents instead might determine the course of civilization for billions of years to come. And it’s an open question how good or bad such a civilization would be.

But AGI is not the only way in which the world’s values might become locked-in. Consider military conquest on Earth: if one ideology gradually overpowered the entire planet, it could become entrenched indefinitely — especially given access to advanced surveillance technology, and human genetic engineering. Or think beyond Earth: if one culture made greater efforts to settle in space, or had greater ability to do so, then eventually it would dwarf any culture that chose to remain Earthbound.

Lock-in is not the stuff of science fiction. We know it is possible, because some forms of lock-in have already occurred. After all, why is there only one generally intelligent, language-using species on the planet, rather than many? For a time, a plurality of primates and archaic humans existed that could have evolved into such a species. But Homo sapiens proliferated, and rendered its near-neighbors, such as the Neanderthals, extinct. That’s lock-in: we’ll very likely remain the only generally intelligent species on Earth, for as long as we’re around at all.

Because value lock-in would determine the shape of the very long-run future, we should think seriously about what to do about it in advance. Should we lock in our current values, given the choice? Probably not. We are almost certainly blind to major injustices and moral errors. Consider how egregious widespread practices from history, like slavery, appear to us today. Can we be confident that future generations won’t similarly look back on our world, and be appalled by some widespread crime or injustice that we are currently almost blind to? Of course not. It is extraordinarily unlikely that, of all generations across time, we are the first ones that have gotten it completely correct.

Instead, what we want to do is build what I’ll call a morally exploratory world: structuring the world so that, over time, those norms and institutions that are morally better are more likely to win out and that, over time, we converge on the best possible society. This project takes on urgency because we might well be approaching times when the values that are predominant get wholly or partially locked-in; the formation of a world government could be such a time, as could the development of AGI, as could the first serious efforts at space settlement.

In other words: the glass of the world’s values is cooling, and at some point, it might set. Whether it sets into a sculpture that is beautiful and crystalline, or mangled and misshapen, is, in significant part, up to us.

Chapter 5: Extinction

In 1994, comet Shoemaker-Levy-9 slammed into the side of Jupiter with the force of 6 million megatons of TNT, equivalent to 600 times the world’s nuclear arsenal. The comet left a scar on Jupiter 12,000 kilometers across, and made headlines across our world. Threats to Earth from asteroids no longer seemed hypothetical. So, in 1998, Congress gave NASA the funding to find more than 90% of all asteroids and comets larger than 1 kilometer within a decade. The effort was called Spaceguard.

It was an overwhelming success. We have now identified 98% of the extinction-threatening asteroids measuring at least 10 kilometers across. We now know that the risk of an extinction-level asteroid impact is at least one hundred times lower than we could previously have known.

Spaceguard showed that we are collectively capable of organizing to manage threats to humanity’s survival. A few tens of millions of dollars was enough to manage the risks from asteroids, such that we now know the overall risk is very low. But in the coming decades, we will have to deal with much greater risks. If we do not again rise to the challenge, there is an unacceptably high chance that humanity could come to an untimely end, and our entire future will be destroyed.

One of the biggest risks is posed by future pandemics. Looking to the future, the threat posed by pandemics will become far greater than it has ever been. This greater threat comes not from naturally arising pathogens, but from diseases that we ourselves will design, using the tools of biotechnology. Biotechnology has recently seen breathtaking progress: the first time we sequenced the human genome, it cost just under $100 million to do so. Just fourteen years later, sequencing a full human genome costs just under $3,000[4].

And the tools of synthetic biology are becoming ever more accessible to anyone in the world. In a sense, we are lucky that the fissile material required for nuclear weapons is so difficult to manufacture: it’s currently impossible to manufacture a nuclear weapon in your garage. Not so for artificial pathogens. In principle, viruses could soon be designed and produced with at-home kits. In fact, it’s already possible to order the genetic components of horsepox, a relative of smallpox, and assemble it at home at a cost of just $100,000. And the genetic recipe for smallpox is already freely available online.

Sadly, we have reason to believe some actors will consider weaponising advances in biotechnology. As evidence, we now know that the Soviet Union conducted a secretive bioweapons program that lasted 67 years, employing as many as 60,000 personnel at its height.

But it’s not just the malicious use of bioweapons that I’m so worried about. Catastrophe could just as well be caused by a lab escape: the accidental release of a pathogen with pandemic potential. Alarmingly, history is already full of examples. Between 1982 and 2003, there were no fewer than 29 accidental exposures in American labs licensed to work with especially dangerous pathogens. At the time of writing, many experts believe there is a reasonable chance that COVID-19 was not a naturally occurring virus that jumped from animals into humans, but rather that it escaped from a lab.

It’s difficult to rule out the possibility that engineered biology could cause human extinction. Even more clear is that humanity’s track record with this emerging weapon of mass destruction is dire: if we are going to avoid disaster in the future, (sidenote: See also Toby Ord (2021), The Precipice, Chapter 5, Section I “Pandemics”)

When considering human extinction, we should also consider the prospect of a third world war this century. In one narrative, we are living through a Long Peace, where the chance of conflict between great powers is historically low. There’s some truth to that. But experts suggest the chance of a world war this century is (sidenote: Researcher Stephen Clare estimates that “the chance of direct Great Power conflict this century is around 45%” and “the chance of a huge war as bad or worse than WWII is on the order of 10%”, in an investigation commissioned by the Forethought Institute, which MacAskill is affiliated with.). Political scientist Graham Allison suggests that, over the last 500 years, when one power grows to surpass a hegemon, as will happen with China overtaking the US, war has broken out 12 out of 16 times[5]. Reducing the risk of the next world war could therefore be one of the most important ways we can safeguard civilization this century.

If humanity became extinct and no similar species replaced us, then the significance of that extinction event would last for millions of years into the future. But isn’t it obvious that some other species would evolve to develop the abilities that make civilization possible?

Not so fast. One reason to doubt that intelligence would reemerge is based on the Fermi paradox: the paradox that, even though there are at least hundreds of millions of rocky habitable-zone planets in the galaxy, and even though our galaxy is more than old enough for interstellar civilization to spread widely across it, we see no evidence of alien life. If the galaxy is so vast, and so old, why is it not teeming with aliens? The most compelling answer is that (sidenote: For more, see Scott Alexander’s ‘SSC Journal Club: Dissolving The Fermi Paradox’.): perhaps including the transition to a species capable of using language and building a civilization.[6]

In fact, we don’t know how unlikely it was that biological evolution would produce a species that was capable of building civilization, even after mammals or primates had evolved. For all we currently know, the evolutionary step from mammals to a species capable of building civilization could have been astronomically unlikely to occur.

From a cosmic perspective, humanity is an unlikely species. Not only might Earth be home to the only spark of consciousness in the universe, but we are the only species capable of wondering at this fact. That rarity imposes a certain responsibility. Now and in the coming centuries, we face risks that could permanently derail civilization or cause human extinction. If we mess this up, we mess it up forever.

Chapter 6: Collapse

If you were the Emperor of Rome in 100 AD, you would have presided over an area larger than today’s European Union. Your economy would have been complex and sophisticated, with a high degree of division of labor, a banking system, and international trade across continents. You might have expected Rome to continue growing ever richer and more technologically advanced, and its civil institutions to flourish into the future.

Above: the extent of the Roman Empire in 117 AD

This is not what happened. In the 5th century, the city of Rome was sacked twice by marauding Germanic tribes: in 410 AD by the Visigoths and in 455 AD by the Vandals. And a few decades later, the entire Western Roman Empire collapsed. Only in the early 19th Century did any European city surpass the population of Rome at its ancient peak.

In the previous chapter, we discussed the risk of human extinction, which is one way that civilization could end. But disasters that kill everyone are extreme and unprecedented. Global catastrophes that fall short of killing everyone are arguably much more likely. Might such a catastrophe bring about the permanent collapse of civilization?

The historical evidence suggests that human civilization has been surprisingly resilient to catastrophe. The last year in which the global population declined was 1918, because of the Spanish Flu pandemic. Even though World War 2 was arguably the deadliest war in history by proportion of the world population killed, it did not cause the global population to decline.

Consider the Black Death, a pandemic of the bubonic plague in the 14th century that spread across the Middle East and Europe. In all, around one tenth of the global population lost their lives. If any natural event would have brought about the collapse of civilization, we would have expected this to be it. But, despite the enormous loss of human lives and abject suffering that the Black Death caused, it did little to negatively impact European economic and technological development. European population size fully returned to its pre-pandemic levels two centuries later.

Take a more recent example: the atomic bombing of Hiroshima (sidenote: John Hersey’s (1946) Hiroshima is a good resource written shortly after the bombing by a US journalist.). The immediate destruction was enormous: 90% of the city’s buildings were at least partially incinerated or reduced to rubble; 80,000 people were killed instantly, with a further 60,000 dying in the following months. A large share of the city’s population lost their lives.

But Hiroshima rebuilt. Despite the devastation, power was restored to the main rail station and port within a day, to 30% of homes within two weeks, and to all homes within two months. The Ujina railway line was running the day after the attack, a streetcar service was running within three days, and water pumps were working again within four days. The Bank of Japan, just 380 metres from the hypocenter of the blast, reopened within just two days. Roads and major commercial infrastructure were rebuilt within eighteen months. The population of Hiroshima returned to its pre-destruction level after just 12 years, and today, it is a thriving modern city of 1.2 million people.

Sometimes people claim that, because the modern world is so complex and inter-reliant, it is therefore fragile: if one strut is lost the entire structure will collapse. But this idea neglects people’s astonishing grit, adaptability and ingenuity in the face of adversity. And it neglects that interconnectedness can also be a source of mutual support and resilience.

Perhaps, however, the historical track record is a misleading guide to our resilience to future catastrophes. After all, we have no historical examples of global catastrophes killing more than 20% of the world population. But since the advent of nuclear weapons, we know that we have the capacity to cause more loss of life than that. Advanced bioweapons could be even more destructive. This raises two crucial questions: If there were a catastrophe of unprecedented severity, would society collapse? And if it did collapse, would it ever recover?

Consider a reasonable worst-case nuclear scenario, where 99% of the world population dies in the aftermath of an all-out war, leaving a global population of around 80 million. While writing the book, MacAskill commissioned the researcher Luisa Rodriguez to investigate the chance that humanity would recover from such an event. (sidenote: See Luisa’s interview on the 80,000 Hours podcast: ‘Luisa Rodriguez on why global catastrophes seem unlikely to kill us all’)

A 99% loss of life would be devastating. But consider the last time the population was at 80 million people, which was very roughly in 2000 BC. At this time, even though global civilization was much less technologically sophisticated than today, it was not on the brink of collapse. And there are ways in which a post-catastrophe world would be better off compared to 2000 BC: much of the physical infrastructure, like buildings, tools and machines, would remain usable after the catastrophe. Similarly, most knowledge would be preserved, in the minds of those still alive, in digital storage, and in libraries (there are after all 2.6 million libraries in the world).

On the other hand, the world today is afflicted by major anthropogenic climate change. Could climate change cause global civilization to collapse? And are we considerably less likely to recover in a climate disrupted by human emissions?

Even here, we have reasons for optimism. Thanks in part to youth activism, attention towards climate change has increased significantly, and several key players have made ambitious pledges, most notably with China planning to reach net-zero emissions by 2060, and the EU by 2050.

But we shouldn’t get complacent. We should at least contemplate the worst-case climate scenarios, where we eventually burn through all recoverable fossil fuels. Our best models indicate this would lead to warming of 7–9.5 degrees Celsius. Fortunately, it turns out that it’s very hard to see how even this scenario could directly cause human extinction.

But when we’re considering our chances of recovering from a collapse, it seems like the most crucial fact about our current use of fossil fuels is not in fact the climate impacts. Rather, it’s the sheer fact that we’re using up a non-renewable resource which proved a critical fuel for industrialisation, and may not have a convenient substitute for bootstrapping industry again. In fact, the use of fossil fuels is almost an iron historical law of industrialisation. So it is plausible that running out of easily accessible fossil fuels could hobble our attempts to recover from collapse.

This suggests an under-discussed reason for keeping coal (sidenote: The most easily accessible fossil fuel without advanced tools.) in the ground: not just to avert climate change, but also to improve the prospects for our descendants to recover from a civilizational collapse.

Despite some of the reasons for optimism mentioned in this section, the chance of the end of civilization this century (via extinction or permanent collapse) looks far too high for us to be comfortable with. A probability of at least 1% seems reasonable. But even if you thought it was only a one in a thousand chance, the risk to humanity this century would still be ten times higher than the risk of you dying this year in a car crash.
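To make the comparison above concrete, here is a small worked calculation. It is a sketch using only the figures in the text: the car-crash risk is not stated directly, so it is backed out from the claim that a one-in-a-thousand risk is "ten times higher". The variable names are just illustrative.

```python
from fractions import Fraction

# Figures taken from the text above.
century_risk_reasonable = Fraction(1, 100)   # "at least 1%"
century_risk_cautious = Fraction(1, 1000)    # "one in a thousand"

# Assumption implied by the text: the cautious figure is ten times
# the risk of dying in a car crash this year.
car_crash_risk_this_year = century_risk_cautious / 10

# How many times larger is the 1% estimate than the car-crash risk?
ratio_reasonable = century_risk_reasonable / car_crash_risk_this_year

print(f"Implied car-crash risk this year: 1 in {1 / car_crash_risk_this_year}")
print(f"The 1% estimate is {ratio_reasonable}x the car-crash risk")
```

On these numbers, the implied yearly car-crash risk is one in ten thousand, and the 1% estimate is a hundred times larger than it.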

Chapter 7: Stagnation

In the 11th century, the world’s center of scientific progress was not London, or Tokyo, but Baghdad, during an era known as the Islamic Golden Age. Indeed, translated scientific works from the medieval Islamic world are believed to have played a central role in fueling the Renaissance and Scientific Revolution in Europe. But the Islamic Golden Age did not last: by the 12th century AD, the rate of scientific progress had slowed considerably. The Islamic Golden Age is one example of what is known as an efflorescence: a short-lived period of technological, institutional, or economic advancement in one culture or country through history. The flourishing of thought in ancient Greece, or of engineering innovation in Song China (10th to 13th century), might count as other examples. These efflorescences too were all followed by periods of stagnation or decline.

Our current era is different: we’re growing faster than before, and we’re more interconnected than ever. But will this continue indefinitely? Previously, we considered the possibility that AI could bring about even faster technological progress than we’ve seen to date. In this chapter I’ll consider the opposite possibility. Perhaps future historians will look back on our era as just another great efflorescence, which, like the efflorescences before it, was followed by stagnation. My concern here is not just with a slowdown in innovation, but with a near halt to growth and a plateauing of our level of technological advancement.

Is technological progress already slowing down? Some of the evidence suggests it might be. In a landmark report called “Are ideas getting harder to find?”, economists from Stanford and LSE noticed a pattern in progress across all kinds of industries and firms. Progress in almost all areas of research, they found, is becoming exponentially more difficult. As we discover the most obvious ideas in science and technology, we pick all the ‘low-hanging fruit’ — and what’s left behind is increasingly out of reach.

Over the past century, we’ve seen relatively steady, though slowing, technological progress. But we’ve only been able to sustain this progress by throwing exponentially more researchers and engineers at the problem. In the US, there are over 20 times more researchers than there were in the 1930s. The number of scientists in the world is doubling every 18 years, and it’s plausible that around 90% of all scientists that have ever lived are alive today.

The trend of an ever-growing population seems set to stall, too, and with it the stock of potential scientists and researchers who could reverse the trend. Indeed, the UN projects world population will plateau by 2100[7]. In the long run, it’s plausible that the global population might shrink considerably. At a fertility rate of 1.5 children per woman (roughly the average in Europe), within 500 years the world population would fall from 10 billion to below 100 million. And it’s not at all clear how we might significantly reverse stagnation, whether through redoubling our spending on research, or through trying to reverse this decline in fertility rates. Overall, it seems at least conceivable that, after this century, technological progress slows to a near-halt.
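The population figure above can be sanity-checked with a back-of-the-envelope model. This is a minimal sketch, not the calculation behind the book's claim: it assumes a replacement fertility rate of about 2.1 children per woman and a generation length of about 30 years, neither of which is stated in the text, and it ignores changes in lifespan and age structure.

```python
def projected_population(
    start: float,
    fertility: float,
    years: float,
    replacement: float = 2.1,       # assumed replacement fertility rate
    generation_years: float = 30.0  # assumed length of one generation
) -> float:
    """Population after `years`, shrinking by fertility/replacement per generation."""
    generations = years / generation_years
    return start * (fertility / replacement) ** generations

# Figures from the text: 10 billion people, 1.5 children per woman, 500 years.
population_after_500_years = projected_population(10e9, 1.5, 500)

print(f"Population after 500 years: {population_after_500_years / 1e6:.0f} million")
```

With these assumptions the result is in the tens of millions, comfortably below the 100 million mentioned in the text. Shorter generations or a lower replacement rate shift the number, but not the broad conclusion.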

If we’re running into a period of prolonged stagnation, why might that matter for the very long-run future? It could have two major effects: on future values, and on the probability of civilization’s survival.

Consider how the world’s values might change. On one perspective, stagnation could be a very good thing. Perhaps we should expect gradual moral progress over time, progressing slower than technology now does. If that’s what’s going on, then the values that would guide the future after a long period of stagnation would therefore be better than the values that guide the world today. An alternative perspective is to see the values that are predominant in the world today as being unusually good, such that the values that might emerge after a thousand-year stagnation are likely to be worse. For instance, you might think that people are in general more morally motivated in times of economic growth and technological progress. The political economist Benjamin Friedman argues that, if you look at the historical record, countries tend to make moral progress—becoming fairer, more open, and more egalitarian—during higher-growth periods.[8]

Now consider how stagnation could imperil our survival as a species. The longer we linger in a stagnated condition, the less likely it could be that we make it out. Suppose growth stalls just after we build the means to engineer deadly pathogens. If we don’t progress further, towards discovering defensive measures against those pathogens, it becomes a grim waiting game. Given enough time, a civilization-ending catastrophe would become essentially inevitable.

The idea of sustainability is often associated with trying to slow down economic growth. But if a given level of technological advancement is unsustainable, then that is not an option. In fact, it may be slowdowns in growth that are unsustainable, and only continued progress that is sustainable over the very long-run.

Chapter 8: Making happy people

There are two ways we can positively influence the far future: we can (i) improve the lives of future people, and (ii) ensure that future people get to live in the first place. MacAskill argues that both (i) and (ii) should be key priorities of our time. But how can we be sure about (ii)? How can we compare the value of future lives with people alive today?

Before his untimely death in 2017, Derek Parfit (sidenote: Parfit was known as a brilliant philosopher and a fantastically eccentric person. In the latter half of his life, he would take every opportunity to save time on anything that wasn’t philosophy: literally running between seminars, wearing the same uniform every day, and reading philosophy while he brushed his teeth.) inaugurated several new sub-fields of moral philosophy. This chapter discusses one of these: population ethics. Population ethics is about evaluating actions that might change who and how many people are born, and/or those people’s quality of life. Reflecting on these questions, Parfit became convinced that “[w]hat now matters most is that we avoid ending human history.”[9]

Approaching population ethics, many people begin with an intuition of neutrality: that, from a moral perspective, creating new lives is a morally neutral matter. Philosopher Jan Narveson put it in slogan form: “We are in favour of making people happy, but neutral about making happy people.” Among other things, this intuition says that safeguarding humanity to survive long into the future just isn’t the kind of thing we should care strongly about. What could be wrong with that view?

The first issue is that if creating new lives really is always a morally neutral matter, then bringing about a life you know will be filled with unbearable misery should be a neutral matter. But it isn’t: it’s clearly a terrible thing to do.

In response, you could admit that it is a bad thing to bring about terrible lives, but deny that it is an intrinsically good thing to bring about good lives. However, this view doesn’t appear to work either.

In order to see why it can’t be true, consider this fact: a typical ejaculation contains around 200 million sperm. If any of the other sperm had fertilized the egg that you developed from, then you would not have been born. Therefore, any event that affected the schedules of your biological mother and father on the day that you were conceived, even if only by a tiny amount — like if they just missed the subway on their way home from work — would have had the result that you wouldn’t have been born.

Suppose, for one year, you decide to take the bus instead of driving. In doing so, you’ll impact the schedules of tens of thousands of people each over hundreds of days, because you’ll subtly alter the flow of traffic everywhere you would have driven in your car. Some of those people will have sex and conceive a child later in that day. Therefore, you will have changed the timing of that conception, changed which sperm met the egg, and in turn changed who was born. That different person will then impact the schedules of millions of other people, changing what children they have, and so on, in a cascade of changed identities. Past a certain date, virtually everyone who is ever born will be different from who would have been born if you had chosen to drive instead, and the entire course of the future will be different. Wars will be fought that would never have been fought; monuments built that would never have been built; works of literature written that would never have been written. All because of your choice to take the bus.

Every year, like clumsy gods, we radically change the course of history.

So how does this relate to the intuition of neutrality? You might think that creating people isn’t important, because what matters is that we improve people’s lives. Imagine a bill that increases fossil fuel subsidies, leaving future people with a hotter, more polluted world to inherit. You might have thought that this subsidy is wrong, because it makes those future people worse-off. But, given what I’ve just said, it should be clear that it makes no identifiable people worse-off, because the subsidy would entirely change who is and isn’t born to experience its consequences. So the intuition of neutrality would struggle to say anything about the wrongness of imposing fossil fuel subsidies. And that can’t be right.

So what’s the correct view, if this intuition of neutrality faces unexpected problems?

One suggestion might be to care instead about the average quality of life in the future. Unfortunately, though philosophers agree on very few things, they all agree that this ‘average view’ is wrong. Let’s look at one of the many problems with this ‘average view’. Consider a world full of terrible lives: lives not worth living. Notice that adding 1,000 lives to that world which are all terrible, but slightly less terrible, would increase the average quality of life in that world (although it would remain very low). So the average view would say that adding terrible lives is a good thing, in this instance. Any view that positively recommends bringing about pain and suffering for no good reason cannot be right.
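The problem with the average view is easy to see with made-up numbers. In the sketch below, the wellbeing scores and the size of the starting population are purely hypothetical: negative scores stand for lives not worth living, and only the 1,000 added lives come from the example in the text.

```python
# Hypothetical wellbeing scores: negative means a life not worth living.
existing_lives = [-10.0] * 10_000   # assumed starting world of terrible lives
added_lives = [-9.0] * 1_000        # 1,000 slightly less terrible lives

def average(lives: list[float]) -> float:
    """Mean wellbeing across all lives."""
    return sum(lives) / len(lives)

average_before = average(existing_lives)
average_after = average(existing_lives + added_lives)

total_before = sum(existing_lives)
total_after = sum(existing_lives + added_lives)

print(f"Average wellbeing: {average_before:.2f} -> {average_after:.2f}")
print(f"Total wellbeing:   {total_before:.0f} -> {total_after:.0f}")
```

The average goes up even though every added life is terrible, which is why the average view ends up endorsing the addition. Summing wellbeing instead, the world clearly gets worse.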

A natural alternative is to care not about the average quality of life, but about the total amount of wellbeing: the quality and number of lives. We might call this the ‘total’ view. On reflection, it makes sense that a world with more happy people really is a better world. Think about how much worse the world would have been if Benjamin Lay, Marie Curie, and Harriet Tubman had never been born. ‘Population’ is not some abstract quantity — if the global population were half as large, you and your loved ones may simply never have gotten to exist. As my colleague Toby Ord says: you are population too! If you think it’s a good thing that you or your friends exist, then you might reasonably think that it can be a good thing that new, future people exist.

One surprising practical upshot of this ‘more is better’ view is a moral case for space settlement. Though Earth-based civilization could last for hundreds of millions of years, the stars will still be shining in trillions of years’ time, and a civilization that was spread out across many solar systems could last at least this long. And civilization could be expansive as well as long. Our sun is just one of one hundred billion stars in the Milky Way; the Milky Way is just one of twenty billion galaxies in the affectable universe. The future of civilization could be literally astronomical in scale, and if we could achieve a flourishing society beyond Earth, then the total view would say that we should hope to achieve that potential, and bring about those flourishing future lives.

Chapter 9: Will the future be good or bad?

So far, this book has been arguing that we should aim to preserve humanity’s potential: to secure a long and flourishing future. But can we be so confident that the future can, or will, be worth saving? Will the future be good?

A useful starting point is to ask how well people’s lives are going, today. The evidence on this question is complicated, but a broadly positive story does begin to emerge. One line of evidence comes from surveys that simply ask people if they are happy. The last World Values Survey[10] was in 2014 and includes 60 countries comprising 67% of the world population. It finds that in all countries but one, more than 64% of people rate themselves as very happy or rather happy, and in almost all countries, more than 80% of people say they are happy. In several countries, reported rates of happiness are extremely high. 98% of Qataris say they are happy, 95% of Swedes, and 91% of Americans, and even in a poor country like Rwanda, 90% of people say they are happy.

On balance, the evidence suggests that most people today live lives worth living.

But there’s another question we need to ask: are people getting happier? A common view is that even though the world is getting richer, people are no happier, or are even becoming less happy. In support of this view, one could point to the ‘Easterlin paradox’[11]: that, at a point in time, although higher income is correlated with greater happiness both within and across countries, over time, people and countries do not get happier as they become wealthier.

Though Easterlin’s purported paradox continues to be influential, it doesn’t appear to exist. More recent work with better data in fact supports the view that countries do get happier as they become wealthier. In a sense, this shouldn’t be surprising: poverty lies at the root of a long list of social ills, and the progress we have collectively made in the past century (more than halving extreme poverty, more than doubling literacy, reducing child mortality around five-fold) surely translated into better lives than those of previous generations.

Yet, a 2015 survey of 18,000 adults[12] found that in many rich countries, fewer than 10% of respondents think the world is getting better. This pessimism is driven in part by the negative skew of news. A huge plane crash makes for compelling news, but a long sustained decline in child mortality is rarely deemed worth mentioning. Destructive events are abrupt while improvements are quiet and gradual, and abrupt events make for more compelling headlines. This leads us to focus on the bad and ignore the good, so we miss the huge improvements that are happening all around us.

The claim here is not that we overemphasise present-day problems. Most people still live on less than $7 per day; every year, millions die from easily preventable diseases; millions more are oppressed and abused; and hundreds of millions of people go hungry. This is not a world we should be content with. But there is hope, because we can be confident that progress is possible.

Yet, of course, humans are not the only creatures that matter. We’ve not yet discussed the vast majority of sentient beings on this planet: non-human animals. Here, the story to date is far more depressing. As of 2018, there are at least 79 billion vertebrate land animals killed for food every year, in addition to around 100 billion farmed fish. The suffering we inflict on these animals is difficult to overstate. Chickens, who make up the vast majority of land animals killed for food, probably suffer most. When they’re big enough to be slaughtered, most broiler chickens are hung upside-down by their legs, their heads passed through electrified water, before, finally, their throats are cut. Millions of chickens survive all of this, to finally die after they are submerged in scalding water. It seems hard to resist the conclusion that, when a factory farmed chicken, pig or fish dies, that’s the best thing to have happened to them. And humans are literally outweighed by farmed animals: land-based farmed animals have 50% more biomass than all humans. To be sure, it’s hard to know how to weigh the lives of animals against our own, in a moral sense. But clearly we shouldn’t ignore them.

Once we consider animals, the picture becomes less clear. Perhaps things aren’t as rosy as we thought. Can we salvage a rational case for optimism about the long-run future?

On balance, MacAskill argues that we should expect the future to be good. Far better, even, than many of us ever care to imagine.

The key argument for optimism about the very long-run future concerns an asymmetry in the motivations of future people: people sometimes produce good things just because the things are good, but rarely produce bad things just because they are bad. People often do things because they believe that they are good for themselves, or because they believe they are good for others, or for the world. But people rarely do things just in order to cause harm. And with increasing technological capacity comes the power to right some of the wrongs of the past: for instance, to replace the horrors of factory farming with efficiently-grown synthetic meat.

This asymmetry in motivations is clear when we think about potential pathways to the best and worst possible futures. First, consider the best possible future: civilization is full of beings with long, blissful and flourishing lives, full of artistic and scientific accomplishment, expanded across the cosmos. We can come up with ready explanations of how such a civilization might arise. A first explanation would invoke moral convergence: people in the future might have just recognised what is good, and worked to promote the good. On the other hand, it is difficult to imagine what motivations might lead us, in the fullness of time and technological sophistication, to build a dystopia for ourselves. Therefore, we have grounds for hope.

Chapter 10: What to do

We still don’t know a great deal about the future. Suppose that a highly educated person in the year 1500 set out to make the long-term future go well. The ideas that the Earth’s habitable lifespan could be a billion years, and that the universe could be so utterly enormous (yet finite and almost entirely uninhabited) would not even have been on the table. And the ideas of the scientific method, or of artificial intelligence, would have been similarly hard to imagine. In many ways, we stand before the future like this person stood before us. It is as if we’re setting off on a long expedition, without a map, and trying to peer through a thick fog.

Nonetheless, we can follow dependable rules of thumb. First, take those actions where we can be confident the action is good. Even if we have little idea what our expedition will involve, we can be confident of the value of tinder and firelighters, and first aid supplies. Second, try to increase how many options are open to us. Third, learn more. Our expedition could climb a hill in order to get a better view of the terrain, or scout out different routes ahead.

These three lessons — take robustly good actions, build up options, and learn more — can help guide us. First, some actions make the long-term future go better across a wide range of possible scenarios, such as promoting innovation in clean energy. Second, some paths keep many more options open than others, such as maintaining a diversity of cultures and political systems. Third, we can start conducting more research right now. Currently there are few attempts to make predictions longer than a decade, and almost no attempts beyond a hundred years. As a civilization, we can invest resources into doing better.

That is general advice. But how should you choose which particular issue to work on? One area in which we can already see clear paths is biosecurity and pandemic preparedness. We know the actionable ideas, and there is plenty of philanthropic and government funding waiting to make them happen. We could be investing now in vaccine development, cheap universal diagnostics, and broad-spectrum antivirals. People who combine an entrepreneurial aptitude with domain expertise can therefore make a big difference in this area, starting today.

You should also consider which issues are most neglected: that is, how little effort is currently being directed at an issue, relative to the attention it deserves. Ten years ago, almost no-one was working to positively steer the trajectory of AI. But thanks to years of research and advocacy, there are now dozens or even hundreds of people working on this problem, and tens of millions of dollars are spent on it every year. Perhaps there is another overlooked technology on the horizon that poses a grave threat to the survival of civilization. Perhaps some changes to our institutions and culture are currently being entirely ignored.

Once you’ve chosen an issue, what should you do? How can you make the biggest difference over your lifetime? First, it may be a mistake to focus on your personal consumption as the main way you can make a difference. To illustrate: the impact of choosing to reduce your carbon footprint is necessarily capped, since you can’t emit less than zero tonnes of carbon per year. Instead, by donating even a fraction of your disposable income to an organisation like the Clean Air Task Force, you can make a difference equivalent to entirely eliminating the carbon footprint of many people.

Donations aren’t the only way to do better than just cutting your consumption. You could advocate for political change through activism; spread important ideas through social media; and even consider raising the next generation through having children.

However, the most ethically important decision you will ever make will likely be your choice of career. Because of the sheer length of your career — typically around 80,000 hours — this is likely the means by which you can achieve the most positive impact.

One way you might use the years ahead of you is to spread the ideas of longtermism, and slowly build a movement of people united around a concern for a flourishing future. But we can’t assume such a movement will succeed immediately. Consider feminism. Mary Wollstonecraft is often regarded as the first modern feminist. Her seminal work, A Vindication of the Rights of Woman, was published in 1792. But it was only in 1918 and 1920 that women got the right to vote in the UK and US, respectively; and only in 1971 that women got the same right in Switzerland. And progress still remains to be made for women’s rights.

Yet, when we look at the trajectory of concern for future generations to date, we have reasons for hope. The wellbeing of future generations is increasingly becoming a topic suitable for dinner-table conversation, largely thanks to the trailblazing efforts of environmental activists. We should continue to build a movement of morally motivated people, united in concern for the entire future.

If we are focused and diligent, we can improve humanity’s prospects over the long-run future. But we should not assume this will happen automatically. There’s no inevitable arc of progress to prevent civilization from continuing indefinitely in its myopic ways, stumbling into dystopia or oblivion. We are not destined to succeed.

Out of the hundreds of thousands of years in humanity’s past, and the potentially billions of years in her future, we find ourselves at a time of extraordinary change. Now is the time that a movement of people can form and say: we believe in the rights not just of those in our country, or in our generation, or our children’s generation, but of all those people who are yet to come.

Further reading

Much has been written about What We Owe The Future since it was published. Here’s an incomplete list of reviews:

- (sidenote: This is my favourite critical review which I’ve read, and I agree with much of it.)

- What We Owe The Future Review — Kelsey Piper in Asterisk magazine

- Book Review: What We Owe The Future — Scott Alexander in Astral Codex Ten

- What We Owe The Future: A review and summary of what I learned — Michael Townsend for Giving What We Can

For more resources on longtermism in general, you could take a look at longtermism.com.

Adams, F. C., and Laughlin, G. (1997). ‘A Dying Universe: The Long-Term Fate and Evolution of Astrophysical Objects’, Reviews of Modern Physics, 69(2), 337–72 ↩︎

Gould, S. J. (1989), Wonderful life: the Burgess Shale and the nature of history ↩︎

Good, I. J. (1965), ‘Speculations Concerning the First Ultraintelligent Machine’ ↩︎

National Human Genome Research Institute (2022), DNA Sequencing Costs: Data ↩︎

Allison, G. (2015), ‘The Thucydides Trap: Are the U.S. and China Headed for War?’ ↩︎

Ord, T., Drexler, E., and Sandberg, A. (2018), ‘Dissolving the Fermi Paradox’ ↩︎

United Nations Population Division, ‘World Population Prospects’ ↩︎

Friedman, B. (2008), ‘The moral consequences of economic growth’ ↩︎

Parfit, D. (2011), On What Matters: Volume Two ↩︎

Haerpfer, C., Inglehart, R., Moreno, A., Welzel, C., Kizilova, K., Diez-Medrano J., M. Lagos, P. Norris, E. Ponarin & B. Puranen et al. (eds.). 2020. World Values Survey: Round Seven – Country-Pooled Datafile. Madrid, Spain & Vienna, Austria: JD Systems Institute & WVSA Secretariat. doi.org/10.14281/18241.1 ↩︎

Easterlin, R. (1974) ‘Does Economic Growth Improve the Human Lot: Some Empirical Evidence’ ↩︎

YouGov (2015), ‘World Getting Better vs Getting Worse’ ↩︎
